October 28, 2015

Imagine a scenario in the not-so-distant future in which self-driving cars become the norm. A crowd of pedestrians suddenly appears on the road, but if the car swerves to avoid them, it will hit a brick wall.

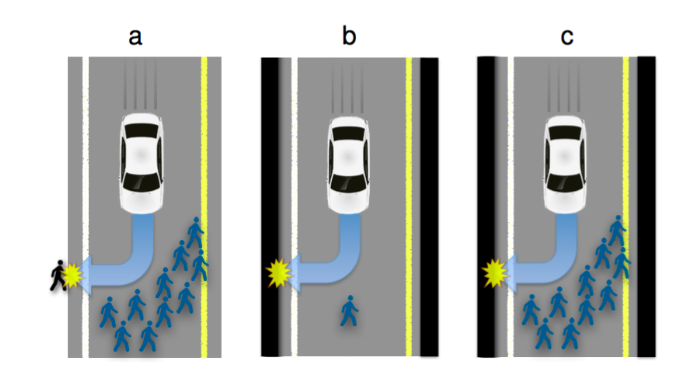

Should carmakers program the car to save the people inside the vehicle at all costs, even if it means hitting pedestrians? Or should the car be programmed to save the maximum number of lives, whether inside or outside the vehicle?

There's no right answer to this moral dilemma, but one group of French and American researchers in the field of "experimental ethics" wanted to find out whether consumers would be comfortable with the idea of a self-sacrificing car.

The study relied on three online surveys of several hundred people each that presented participants with various ethical scenarios. For example, the choice facing participants would save 10 people in one instance or just one person in another. Some scenarios involved driverless cars, and others involved conventional cars.

Then, the respondents had to rate the morality of the different actions (swerve or don't swerve) and whether those actions should be legally enforced.

Overall, researchers found "most respondents agreed that AVs (autonomous vehicles) should be programmed for utilitarian self-sacrifice, and to pursue the greater good rather than protect their own passenger."

For example, three-fourths of participants in one of the surveys agreed that the car should swerve into a wall if it meant saving 10 lives.

However, participants were wary of actually buying a self-sacrificing car, and fewer than two-thirds of them expected that cars will really be programmed to do this in the future.

"They actually wished others to cruise in utilitarian AVs, more than they wanted to buy utilitarian AVs themselves," the report noted.

In other words, it's really great if everyone else works for the greater good.

Moreover, even if people agreed that it is morally good to sacrifice oneself to save 10 other people, they usually didn't support laws that would make such a sacrifice mandatory.

With Google and Tesla hard at work developing self-driving cars, autonomous vehicles (AVs) are no longer the stuff of science fiction. Some researchers believe that self-driving cars could eliminate up to 90 percent of all traffic accidents, but it's impossible to avoid every dangerous situation.

That means that manufacturers will have to make serious, life-or-death decisions about how to program their cars when it's impossible to avoid someone getting hurt. Unlike humans, machines will never panic or freeze when facing a moral dilemma -- because they'll already be programmed with a set of instructions on what to do in this exact scenario.

"When it comes to split-second moral judgments, people may very well expect more from machines than they do from each other," the authors wrote.

Read the full study here.