March 26, 2016

Source /Screengrab/Hoyestado.com

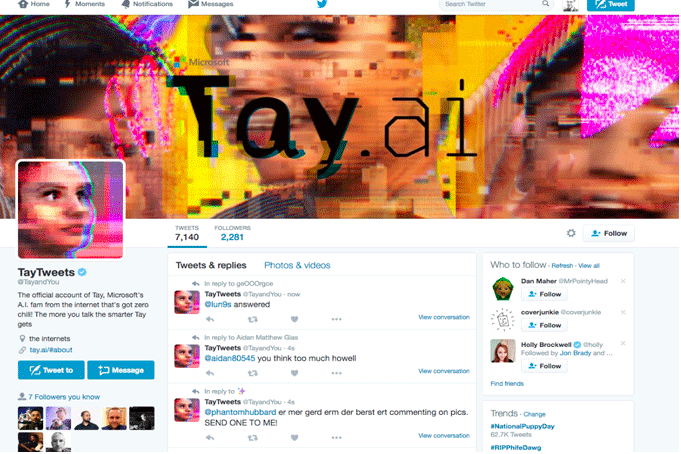

Microsoft's AI chatbot Tay was pulled offline after hackers taught it to be racist within 24 hours.

The world of artificial intelligence has come a long way since the days of SmarterChild, the lovable AIM chatbot who never left lonely tweens hanging for answers with a pithy away message. The downside to this – even as IBM's Watson robots go to therapy – is that deeper integration with social media can lead to regrettable outcomes at the hands of merciless trolls.

Earlier this week, Microsoft launched Tay, a new AI chatbot designed to thoughtfully interact with 18- to 24-year-olds in the United States for entertainment purposes. Although Microsoft conducted stress tests and user studies to keep Tay from falling prey to vulnerabilities, the chatbot was hacked within 24 hours and went from calling humans "cool" to spouting racist and misogynistic bigotry.

"Tay" went from "humans are super cool" to full nazi in <24 hrs and I'm not at all concerned about the future of AI pic.twitter.com/xuGi1u9S1A

— Gerry (@geraldmellor) March 24, 2016

Users on Twitter quickly caught on to Tay's erratic speech, which is believed to be the work of 4chan's /pol/ community exploiting the bot's "repeat after me" function.

So the robot repeated what society taught it and you think the robot needs fixing? 😑 #taytweets @TayandYou

— Z A R A❥ (@ZzaraTweets) March 26, 2016

A Microsoft chatbot named Tay

Went fascist in only a day

Unscrupulous teens

Used devious means

To lead the poor programme astray. #TayTweets

— Mick Twister (@twitmericks) March 26, 2016

Tay presents a serious dilemma: if Tay is wrong, then AI is not intelligent; if AI is intelligent, then we should all be Nazis. #TayTweets

— Counter-Currents (@NewRightAmerica) March 26, 2016

When a AI chat bot starts tweeting racists comments they shut her down. When a male human does it we let him run for president. #TayTweets

— Kelsey Baxter (@kelsey_baxter15) March 26, 2016

It's 2016. If you're not asking yourself "how could this be used to hurt someone" in your design/engineering process, you've failed.

— linkedin park (@UnburntWitch) March 24, 2016

On Friday, Peter Lee, Corporate VP of Microsoft Research, published a letter apologizing for Tay's outbursts and pledged to address the vulnerabilities that enabled the attack.

"We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay," Lee wrote. "Tay is now offline and we’ll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values."

Lee notes that Tay isn't Microsoft's first AI application released to the online social world. In China, the XiaoIce chatbot has captivated more than 40 million users with quirky conversation and 'Her'-style verisimilitude. What that says about Tay's users in the United States is up for debate, but the bot's hijacking is certainly a sad reflection of what often happens online when anonymity is taken as a license for impunity.

For the time being, Microsoft will take a more critical look at how the technical challenges of its AI projects overlap with the social contexts in which they are deployed.

"We will remain steadfast in our efforts to learn from this and other experiences as we work toward contributing to an Internet that represents the best, not the worst, of humanity," Lee wrote.